Incorporating AI Without Crashing

A Practical Roadmap for Existing Teams

If you want to add AI to your existing workflow, the worst place to start is with production code.

If your organization does not already execute a fully automated quality process — one that supports continuous delivery with very small, very frequent changes and fast feedback all the way to production — AI-assisted coding will amplify your problems, not solve them.

This isn’t a judgment; it’s systems thinking.

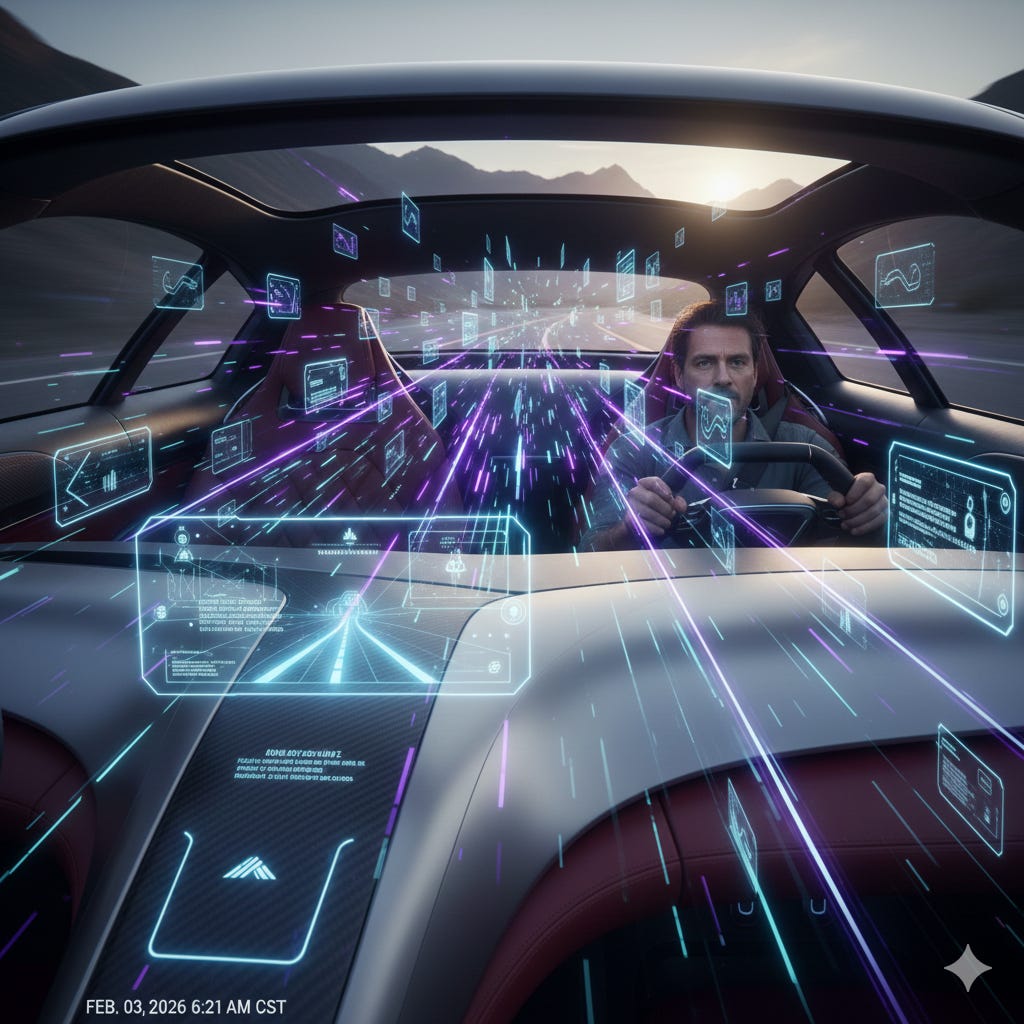

Asking an AI to write code for a weak delivery system is like putting a bigger engine in a car with no way to steer or stop. You will go faster, briefly, and then something expensive will happen.

So where should you start?

Use agents to help you fix the problems that prevent you from being “good” at delivery in the first place.

This is the pragmatic roadmap I recommend for teams that want real value sooner and more safely, using AI without YOLO-ing garbage into production.

AI as Organizational Chaos Testing

I recently told Charity Majors that introducing AI into an organization is effectively chaos testing for the organization itself. It is the fastest way I’ve seen to surface systemic weaknesses that were already there — unclear requirements, weak feedback loops, brittle quality processes, and governance theater that collapses under real pressure.

Chaos engineering of systems does this intentionally by injecting failure to reveal hidden coupling and fragility.

AI does the same thing — unintentionally, but relentlessly.

When you add AI to development workflows:

Vague requirements fail faster

Missing tests are exposed immediately

Weak guardrails get bypassed

Slow feedback loops become painfully obvious

The AI didn’t create those problems. It just removed the illusion that they weren’t there.

This is why so many organizations conclude that “AI doesn’t work for us.” What they’ve really learned is that their delivery system only functioned because humans were compensating for broken processes with experience, heroics, and institutional knowledge.

You can use AI as a diagnostic tool to surface these, but I wouldn’t suggest it. I’d suggest assuming you have these problems unless you are already shipping to production daily with low defect rates.

So, where SHOULD we start?

Step 1: Start With Good Tools

This part is obvious to anyone who’s struggled with Temu-grade tools or tried to use a slotted screwdriver to drive a Phillips screw. When I’m focused on woodworking projects, I use the best tools I can afford because accuracy produces less waste and frustration than the false economy of inexpensive tools.

For work (as of today), I primarily lean on Claude and Gemini. Not because they are magical, but because they are the best models I can find right now; weaker models are less capable, less accurate, and cause more rework. Hallucinations, shallow reasoning, and inconsistent outputs all add friction to feedback loops.

A better model reduces the time you spend debugging the assistant rather than the problem.

Step 2: Clarify the Work Before Anyone Codes

The lowest-risk, highest-return use of AI is feedback on written intent.

Before code exists, you already have artifacts:

Requirements

User stories

Acceptance criteria

Feature descriptions

Tickets, documents, or whatever your organization calls “the thing we think we want.”

These artifacts are usually defective in some way: incomplete, ambiguous, or inconsistent.

AI is very good at spotting gaps:

Missing edge cases

Conflicting statements

Undefined terms

Assumptions masquerading as facts

Requirements that can’t be tested

Ask the model questions like:

“What would be hard to test about this?”

“What assumptions are being made here?”

“What information would a developer need that isn’t stated?”

“What could a user reasonably expect that isn’t covered?”
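As a minimal sketch of how the questions above could be applied consistently, the snippet below wraps any written artifact and the four questions into one review prompt. The helper name `build_review_prompt`, the delimiters, and the instruction wording are all hypothetical; the resulting string can be pasted into whichever model you use.

```python
from typing import Sequence

# The four review questions from the list above.
REVIEW_QUESTIONS = (
    "What would be hard to test about this?",
    "What assumptions are being made here?",
    "What information would a developer need that isn't stated?",
    "What could a user reasonably expect that isn't covered?",
)


def build_review_prompt(artifact: str, questions: Sequence[str] = REVIEW_QUESTIONS) -> str:
    """Wrap a written artifact and the review questions in a single prompt.

    Hypothetical helper: the delimiters and wording are illustrative only.
    """
    numbered = "\n".join(f"{i}. {q}" for i, q in enumerate(questions, start=1))
    return (
        "Review the artifact below. Do not propose code or solutions.\n\n"
        "--- ARTIFACT ---\n"
        f"{artifact.strip()}\n"
        "--- END ARTIFACT ---\n\n"
        "Answer each question separately and quote the text you are reacting to:\n"
        f"{numbered}\n"
    )


if __name__ == "__main__":
    # Made-up user story, used only to show the output shape.
    story = "As a customer, I want fast checkout so that I can pay quickly."
    print(build_review_prompt(story))
```

Keeping the questions in one place means every story, ticket, or compliance rule gets the same scrutiny, and the list can grow as the team learns which gaps keep recurring.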

A recent example from my own work is using Claude to review over 10k incident tickets from the past few years to identify patterns and prioritize what to address, and in what order, based on the cost of each incident type. It would have taken humans weeks just to assemble the data in a consumable way, much less make sense of it. With Claude, it took an afternoon to plug into Jira, read the records, and create a professionally formatted report with roadmap suggestions and examples of specific issues, including where they exist in the code.

Another example was analyzing new compliance rules for contradictions, gaps, or untestable rules, and then giving those documentation defects back to Compliance to correct before the bad input was fed into the development flow.

If you only used AI for this step, you would already see a meaningful improvement. Better inputs lead to smaller changes, fewer rework loops, and higher confidence downstream.

These are things that most teams skip because of the time and effort required. Instead, they try to fill in the blanks or misunderstand the intent, resulting in rework.

Step 3: Harden Your Guardrails

Once the intent is clarified, the next priority is making it harder to make mistakes.

Most teams think of quality guardrails as “tests.” That’s necessary but insufficient.

You need two classes of guardrails:

1. Product and Operational Guardrails

These answer:

Does the code behave as defined?

Does it run correctly in the real target environment?

Does it meet security, performance, reliability, and compliance expectations?

This is the familiar CI/CD conversation: automated tests, deterministic pipelines, repeatable deployments, and fast feedback.

If this isn’t already automated, AI will not fix it. It will expose it.

2. Development Guardrails

This is where newer opportunities exist.

Beyond linting and formatting, we can now ask:

Is the code idiomatic for this language?

Is it structured in a maintainable way?

Are names meaningful?

Are responsibilities well-separated?

Does the design drift from established patterns?

Is the code even testable in its current form?

These are things senior developers try to catch in code review… inconsistently and expensively.

As more of these validations are automated, it becomes more difficult for deviations to escape developers’ IDEs, let alone reach prod. These standards are enforced continuously, without social friction, and without waiting for review meetings.

If your process makes it difficult to introduce bad structure or bad design, AI-assisted coding becomes dramatically safer later.
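To make the idea of an automated development guardrail concrete, here is a small sketch that uses Python's standard `ast` module to flag two of the concerns listed above: functions that have grown too long and names too short to be meaningful. The thresholds and the `check_source` helper are assumptions chosen for illustration, not a recommended standard; a real team would more likely configure an existing linter or an agent-based review step.

```python
import ast
from typing import List

MAX_FUNCTION_LINES = 40  # assumed limit; tune per team
MIN_NAME_LENGTH = 3      # assumed limit; tune per team


def check_source(source: str) -> List[str]:
    """Return findings for overly long or tersely named functions."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            continue
        # Count lines from the `def` line through the last line of the body.
        length = node.end_lineno - node.lineno + 1
        if length > MAX_FUNCTION_LINES:
            findings.append(
                f"line {node.lineno}: '{node.name}' is {length} lines "
                f"(limit {MAX_FUNCTION_LINES})"
            )
        if len(node.name) < MIN_NAME_LENGTH:
            findings.append(
                f"line {node.lineno}: name '{node.name}' is shorter than "
                f"{MIN_NAME_LENGTH} characters"
            )
    return findings


if __name__ == "__main__":
    sample = (
        "def f(a):\n    return a\n\n"
        "def total_price(items):\n    return sum(items)\n"
    )
    for finding in check_source(sample):
        print(finding)
```

A check like this can run in a pre-commit hook or the pipeline, so the feedback arrives in seconds and does not depend on who happens to review the change.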

Step 4: Make Delivery Easier, Not Faster

At this point, many teams make a mistake: they try to “speed things up.”

Don’t.

The next goal is to remove friction from delivery:

Manual approvals

Fragile environments

Long-running branches

Slow builds

Painful deployments

AI can help here by suggesting automation ideas, identifying tooling you didn’t know existed, or helping prototype improvements to pipelines and workflows.

I’m frequently surprised by how many mature tools exist that teams simply haven’t heard of. Asking AI for options — not solutions — is a good way to expand your design space without committing to anything.

The objective is simple:

Make the right thing easy and the wrong thing hard.

Don’t focus on speed. Focus on friction and motion reduction. Slow is smooth and smooth is fast. When delivery is boring, speed is a natural outcome.

Step 5: Then — and Only Then — Accelerate

Only after the previous steps are in place does it make sense to lean on AI for code generation in any serious way.

At that point:

Requirements are clearer

Tests are defined

Guardrails are strong

Pipelines give fast feedback

Changes are small

Now, AI becomes a force multiplier instead of a liability. Now you’re only limited by your imagination and your fear.

It doesn’t matter whether the code was written by a human or a machine if:

The outcome is deterministic

The behavior is validated

The change is reversible

The blast radius is small

That’s what continuous delivery was always about. AI doesn’t change the rules; it just makes the lack of rigor more obvious.
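The four conditions above can be sketched as an author-agnostic release gate. Everything here is hypothetical: the `Change` fields, the `MAX_CHANGED_LINES` proxy for blast radius, and the `blocking_reasons` helper are stand-ins for signals your own pipeline would supply.

```python
from dataclasses import dataclass
from typing import List

MAX_CHANGED_LINES = 200  # assumed proxy for "small blast radius"


@dataclass
class Change:
    author: str               # "human" or "agent"; deliberately ignored by the gate
    build_reproducible: bool  # same inputs produce the same artifact
    tests_passed: bool        # behavior validated by the automated suite
    rollback_verified: bool   # the change can be reversed
    changed_lines: int


def blocking_reasons(change: Change) -> List[str]:
    """Return the reasons a change may not ship; an empty list means go."""
    reasons = []
    if not change.build_reproducible:
        reasons.append("outcome is not deterministic")
    if not change.tests_passed:
        reasons.append("behavior is not validated")
    if not change.rollback_verified:
        reasons.append("change is not reversible")
    if change.changed_lines > MAX_CHANGED_LINES:
        reasons.append("blast radius is too large")
    return reasons


if __name__ == "__main__":
    change = Change("agent", True, True, True, changed_lines=35)
    print(blocking_reasons(change) or "ready to ship")
```

Note that `author` never appears in the checks: the gate asks the same questions of a human's change and an agent's change.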

The Roadmap, Condensed

The roadmap is intentionally boring:

Clarify the work to be done

Make it harder to make mistakes while doing that work

Make it easier to deliver the work

Then accelerate the work

This order matters.

Skip steps, and you’ll still ship faster, but…

Final Thought

This recommendation comes from someone with far too many years of on-call experience to romanticize failure.

AI is not reckless. People are.

Used well, agents help you see your system more clearly and improve it faster. Used poorly, they simply help you dig holes at scale.

Choose boring. Choose discipline. Then let the machines help you move faster.
